2026 guide to ad creative testing with AI: What to test, how to scale

Not sure what to test or how to scale winning ads? Zeely AI worked across multiple industries to identify the proven methods for ad creative testing with AI that boost results.

Ad creative testing takes the mystery out of advertising. Instead of relying on hunches, it gives you proof of what actually works. From Meta Ads to TikTok, ad creative testing turns guesswork into a repeatable system that scales across markets, whether you’re running campaigns in the U.S. or worldwide.

What is ad creative testing?

Ad creative testing is the structured process of comparing ad variations to identify what drives measurable performance: clicks, conversions, or lower CPAs. Instead of relying on creative hunches, testing provides a repeatable way to validate what works, across every stage of your campaign.

On platforms like Meta Ads, TikTok, and Instagram, where user behavior shifts fast, creative testing acts as a live feedback loop. You can test headlines, visuals, formats, or calls to action and let real results guide your spend.

For those starting out, this guide explains ad creative testing for beginners: what to test, how long to run it, and how to scale winners.

According to Harvard Business Review, organizations that embrace experimentation as part of their culture see stronger returns because data-backed decisions compound over time.

6 benefits of ad creative testing

Ad creative testing is how campaigns stop wasting budget and start compounding results. Each test produces proof you can act on, and over time those proofs stack into a system that consistently delivers higher returns. Here’s what you gain when creative testing becomes part of your workflow.

1. Higher Click-Through Rates (CTR)

Testing helps you discover which hooks, visuals, and CTAs actually earn attention. A single winning variation can lift CTR by 15–30%, which widens reach without increasing spend. On Meta Ads, testing the first three seconds of silent autoplay video often delivers double-digit CTR lifts, because captions catch what sound can’t. When more people click, your campaigns scale further with the same budget.

2. Lower Cost per Acquisition (CPA)

By running controlled tests, you can cut underperforming creatives before they drain budget. Adobe’s 2025 Digital Trends Report found 53% of executives now cite efficiency gains from AI-driven optimization, and creative testing is where those gains first appear. For example, e-commerce brands that tested product-focused thumbnails against lifestyle shots often reported CPAs dropping by 12–18% once the right creative was scaled.

3. Increased Return on Ad Spend (ROAS)

Scaling winners and retiring losers means revenue grows faster than costs. Testing directly feeds ROAS because each new creative iteration builds on the last. On TikTok, advertisers who tested UGC-style “pattern interrupt” intros against polished branded clips found that authentic creative lifted ROAS by up to 1.5x. The data doesn’t just improve today’s campaign; it builds confidence in every dollar you spend tomorrow.

4. Stronger conversion rates

Clicks don’t matter unless they convert. Creative testing shows which messaging and design elements drive people from interest to action. Even a 1–2% lift in conversion rate at the ad level compounds into significant revenue gains. For instance, Instagram carousel ads that tested early product reveals against later reveals often converted 8–10% better because the offer was clear upfront. Small shifts in creative placement can ripple through the funnel.

5. Confident scaling of winning ads

Testing gives you the evidence to put more budget behind proven ads with less hesitation. Instead of guessing, you expand spend on ads already validated by data. In one SaaS campaign, split-testing value-prop headlines revealed a 22% performance gap; the winning creative was safely scaled to 5x budget without efficiency loss. This kind of confidence makes growth less of a gamble.

6. Compounding efficiency over time

Each test is more than a result; it’s a data point in your creative playbook. Over time, these learnings reduce fatigue, guide smarter iterations, and prevent the constant reinvention that drains budgets. The Adobe report cited above says 65% of senior leaders now cite predictive analytics and experimentation as primary growth drivers. Creative testing is the front door to that future: proof-driven, AI-enhanced, and scalable.

Types of ad creative testing

Testing isn’t one-size-fits-all. Each framework produces different kinds of truth about your ads. Optimizely’s 2025 analysis of 127,000 experiments showed that aligning method to context delivers faster learnings and stronger creative decisions. Here’s how to choose.

A/B testing

The simplest format: one ad against another.

- Best fit: Small to mid-sized campaigns. Perfect for quick calls like headline vs. headline or CTA vs. CTA

- Where it shines: Meta Ads, where the native A/B tool makes setup straightforward

- Watch for: Ending too early. Small samples create false winners and wasted spend

- Pro insight: A/B still makes up over 80% of industry tests because it works at lower traffic levels. If you’re under 10,000 impressions per variant, this is the method that gives you clarity without burning budget

Multivariate testing

Tests several creative elements at once to see how they interact.

- Best fit: High-traffic campaigns with 50,000+ impressions per combination

- Where it shines: TikTok and Instagram, where captions, visuals, and hooks overlap to shape results

- Watch for: Complexity. Without enough impressions, you get noise instead of signal

- Pro insight: MVT is the only way to see how variables combine. If you want to know whether captions only boost CTR when paired with UGC intros, MVT uncovers that — A/B never will

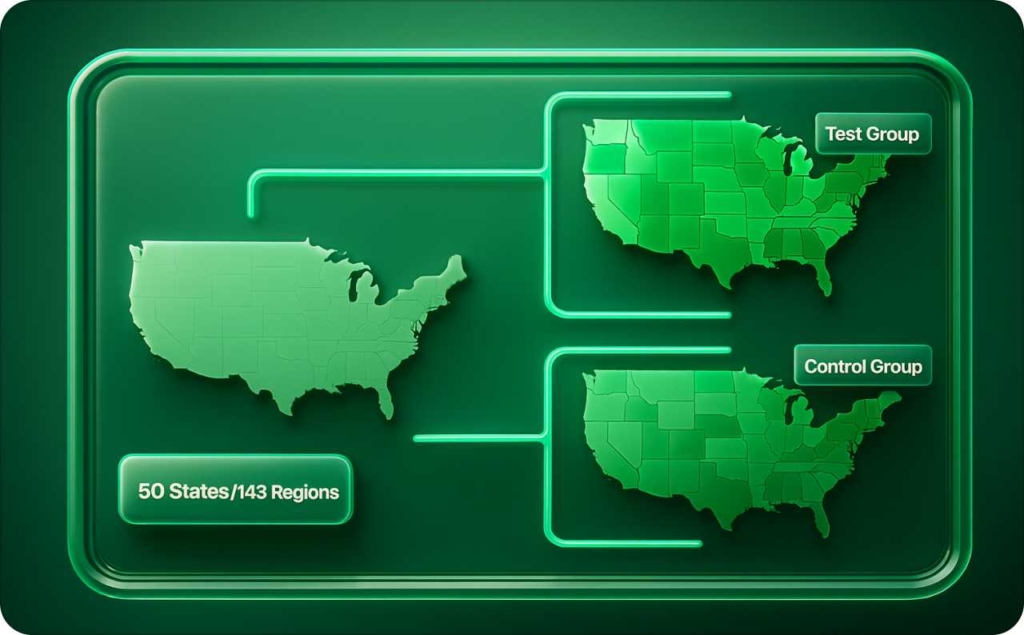

Split / Geo testing

Splits audiences by geography or audience segment to compare complete creative bundles.

- Best fit: Market launches or platform-wide creative packages

- Where it shines: Google Performance Max or YouTube, where geo targeting is baked in

- Watch for: Cultural and audience differences. If markets don’t behave similarly, results reflect geography, not creative

- Pro insight: Use geo tests to validate creative direction in comparable regions. For example, a SaaS brand found “compliance-first” ads doubled conversion rates in the U.K. — insight that justified tailoring creative by market

Bandit testing

Adaptive testing that reallocates traffic to stronger creatives during the test.

- Best fit: Short-lived campaigns like seasonal sales or product drops

- Where it shines: E-commerce promos on Meta or TikTok where time pressure is high

- Watch for: Trade-offs. Bandit tests optimize in-flight but won’t give you clean statistical winners

- Pro insight: Bandits maximize efficiency under pressure. Use them to protect revenue during fast-moving promos, then validate learnings later with A/B or sequential tests
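To make the idea of reallocating traffic mid-test concrete, here is a minimal simulation using Thompson sampling, one common bandit algorithm. It is a sketch, not a description of how Meta or TikTok allocate delivery: the conversion rates are hypothetical inputs, and the function name and parameters are illustrative.

```python
import random


def thompson_sampling(true_rates, rounds, seed=0):
    """Simulate a Beta-Bernoulli Thompson sampling bandit over ad creatives.

    true_rates: hypothetical per-impression conversion rates, one per creative
                (unknown in real life; used here only to simulate outcomes).
    Returns the number of impressions each creative received.
    """
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    impressions = [0] * len(true_rates)

    for _ in range(rounds):
        # Draw one plausible rate per creative from its Beta posterior.
        samples = [
            rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)
        ]
        # Serve the creative whose sampled rate is highest.
        chosen = samples.index(max(samples))
        impressions[chosen] += 1
        # Simulate whether this impression converted.
        if rng.random() < true_rates[chosen]:
            successes[chosen] += 1
        else:
            failures[chosen] += 1
    return impressions


if __name__ == "__main__":
    # Three hypothetical creatives; the third converts best.
    print(thompson_sampling([0.02, 0.05, 0.10], rounds=5000))
```

Because weaker creatives receive fewer impressions as the test goes on, their measured rates stay noisy. That is the trade-off noted above, and the reason to confirm bandit learnings later with a fixed-split test.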

Sequential / Ladder testing

Runs experiments in structured stages — start broad, then refine.

- Best fit: Brands building a long-term creative playbook

- Where it shines: B2B, SaaS, or high-ticket campaigns where insights must compound over months

- Watch for: Over-rigidity. Moving too slowly risks missing live opportunities

- Pro insight: Always ladder your tests: start with a message, then format, then design. Big swings first, refinements last. This sequence saves budget and prevents wasted cycles on details that don’t move the needle

Ad creative variables with examples

Testing isolates levers so you know where the gains are coming from, and which ones are worth scaling. Think with Google’s Cannes 2025 report showed this clearly: one AI-powered campaign achieved a +72% conversion rate lift by systematically testing creative elements, not just channels.

Headline & hook

The hook sets your ceiling. Strong openers can double CTR; weak ones stop ads before they start.

Example: On Meta Ads, urgency-driven hooks like “Shop the Drop Today” beat descriptive lines by 15–25% in cold traffic. But in retargeting, descriptive hooks (“Your summer wardrobe, sorted”) often convert warmer leads at a lower CPA.

Pro insight: Test hooks at the top of funnel for scroll-stopping power. Refine CTAs and offers later, once traffic is warmed up.

Visuals & thumbnails

People judge your ad in milliseconds. Thumbnails and visuals create the first impression that decides whether they stay or scroll.

Example: A beauty brand tested close-up product shots against creator-led demos on TikTok. UGC-style demos lifted watch-through rates by 1.4x, especially when paired with captions.

Pro insight: Visuals interact with hooks. Build a matrix (hook × visual) to see if your best headline only wins when matched with a certain image style.

Format & layout

Carousel, single image, short-form vertical, or long-form video — format dictates both attention span and storytelling pace.

Example: An e-commerce retailer ran Instagram carousels against Reels. Reels outperformed with a 22% conversion lift, driven by early product reveals.

Pro insight: Formats test narrative timing. Use Reels/Shorts for early reveals and urgency, and carousels for education or bundles. Don’t just test the look; test the sequence.

Call to Action

The last three words can swing CPAs. A small shift reframes the entire offer.

Example: A SaaS advertiser on Meta Ads tested “Start free trial” vs. “Book a demo.” “Start free trial” reduced CPA by 14%, lowering friction for colder leads.

Pro insight: CTAs are low-leverage in awareness but high-leverage in retargeting. Don’t waste impressions testing CTAs at the top of the funnel; focus on them when intent is already primed.

Offer & incentive

Discounts, bundles, free trials — the right incentive shapes perceived value.

Example: A subscription box tested “10% off” vs. “First box free.” The free box drove a 28% lift in conversions but hurt ROAS due to upfront cost.

Pro insight: Incentives should be tested with lifetime value in mind. An offer that spikes short-term conversions but lowers ROAS may still win if customer retention is strong.

Building a variable testing matrix

Variables rarely act alone. A headline that wins with one image may flop with another. Advanced teams map creative testing as a matrix (Headline × Visual × CTA) and validate not just single winners, but winning combinations. This layered approach reduces creative fatigue, builds a scalable asset library, and compounds into durable performance gains.
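A matrix like this is easy to enumerate in code. The sketch below builds every Headline × Visual × CTA combination with Python's `itertools.product`. The sample values, the `build_matrix` helper, and the `id` labels are placeholders for illustration, not a required naming scheme.

```python
from itertools import product

# Placeholder creative elements; swap in your own.
headlines = ["Shop the Drop Today", "Your summer wardrobe, sorted"]
visuals = ["product_closeup", "creator_demo"]
ctas = ["Shop now", "Learn more"]


def build_matrix(headlines, visuals, ctas):
    """Return every Headline x Visual x CTA combination as a labeled dict."""
    return [
        {"id": f"v{i:02d}", "headline": h, "visual": v, "cta": c}
        for i, (h, v, c) in enumerate(product(headlines, visuals, ctas), start=1)
    ]


matrix = build_matrix(headlines, visuals, ctas)
for variant in matrix:
    print(variant["id"], "|", variant["headline"], "|", variant["visual"], "|", variant["cta"])
```

Two options per element already yields 2 × 2 × 2 = 8 combinations. At 50,000+ impressions per combination, that is 400,000 impressions for a single test, which is why the matrix approach suits high-traffic campaigns.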

15 tips for testing ad creatives

Ad creative testing works when it feels like a system. HubSpot’s 2025 Marketing Trends report shows short-form video is now the highest-ROI format for many marketers, but without a disciplined process, even great formats underperform. These steps guide you through setup, execution, and scaling so your tests produce proof you can trust.

1. Begin with a clear hypothesis

Write down the exact question you’re testing. For example: “Will urgency-based headlines lift CTR compared to descriptive ones?” This keeps your experiment focused and prevents wasted impressions.

2. Set up one variable at a time

If you’re running an A/B test on Meta Ads, change only one thing — like the headline or thumbnail — so results are easy to interpret. For larger campaigns with higher traffic, you can move to a multivariate creative matrix to see how elements interact, but start simple.

3. Match method to traffic volume

Choose A/B testing for smaller campaigns, multivariate testing when you have 50,000+ impressions per combination, and split testing when you want to see how creative plays across regions or demographics. Using the wrong method creates noise instead of clarity.
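As a rough illustration, the rule of thumb can be written as a small helper. The 50,000 threshold comes from this guide. The function name, the `comparing_regions` flag, and the choice to default to A/B below that threshold are assumptions made for the sketch.

```python
def choose_test_method(impressions_per_combination, comparing_regions=False):
    """Pick a testing method from expected traffic, following the guide's rule of thumb."""
    if comparing_regions:
        # Comparing whole creative bundles across regions or segments.
        return "split/geo"
    if impressions_per_combination >= 50_000:
        # Enough traffic to read interactions between elements.
        return "multivariate"
    # Default for smaller campaigns.
    return "a/b"


print(choose_test_method(8_000))
print(choose_test_method(60_000))
print(choose_test_method(60_000, comparing_regions=True))
```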

4. Prioritize high-impact variables first

Test elements that shift results most — hooks, visuals, and offers. Button colors or minor tweaks can wait. Early wins usually come from the pieces that decide whether people stop scrolling at all.

5. Align tests with funnel stage

At the top of the funnel, test hooks and formats to capture attention. In the middle, focus on CTAs and offers to guide action. At the bottom, refine incentives that nudge warm leads across the line.

6. Test audiences when creative looks flat

If multiple creatives perform about the same, the problem may not be the ad but who’s seeing it. Run an audience split test to see whether targeting, not design, is the friction point.

7. Give each variant enough data

Don’t declare a winner after a day. Let each ad gather several thousand impressions or enough conversions to reach 95% confidence. On Meta Ads, this usually means 7–10 days of consistent delivery.
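One common way to check the 95% threshold is a two-proportion z-test on the raw counts. The sketch below uses only Python's standard library. The function name and sample counts are illustrative, and the native tools on each platform may calculate confidence differently.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion (or click) rates.

    conv_*: conversions (or clicks) per variant; n_*: impressions per variant.
    Returns (z, p_value). A p_value below 0.05 corresponds to 95% confidence.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: variant B gets 150 clicks vs. 100 for A, 5,000 impressions each.
z, p = two_proportion_z_test(100, 5000, 150, 5000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 95%: {p < 0.05}")
```

The same 2% vs. 3% gap measured on only 100 impressions per variant would not pass this check, which is why a one-day read is rarely enough.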

8. Respect the full learning window

Algorithms need time to stabilize. A campaign that looks “bad” on day two may normalize by day seven. Let tests run through a complete cycle before making calls.

9. Use native tools but validate externally

Meta, TikTok, and Google all offer built-in testing tools. They’re efficient, but cross-check with analytics or dashboards to confirm results — especially when tests affect big budget decisions.

10. Measure beyond CTR

A flashy headline can spike clicks but raise CPA if it pulls in unqualified traffic. Always track conversion rate, CPA, and ROAS alongside CTR so winners don’t backfire when scaled.
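The four metrics are simple ratios, so it helps to compute them side by side from the same raw numbers. In this sketch the `AdStats` class and both sets of figures are hypothetical. They are chosen to show a variant that wins on CTR but loses on CPA and ROAS.

```python
from dataclasses import dataclass


def _ratio(numerator, denominator):
    """Return numerator / denominator, or None when the denominator is zero."""
    return numerator / denominator if denominator else None


@dataclass
class AdStats:
    impressions: int
    clicks: int
    conversions: int
    spend: float
    revenue: float

    @property
    def ctr(self):
        # Click-through rate: clicks per impression.
        return _ratio(self.clicks, self.impressions)

    @property
    def conversion_rate(self):
        # Conversions per click.
        return _ratio(self.conversions, self.clicks)

    @property
    def cpa(self):
        # Cost per acquisition: spend per conversion.
        return _ratio(self.spend, self.conversions)

    @property
    def roas(self):
        # Return on ad spend: revenue per unit of spend.
        return _ratio(self.revenue, self.spend)


# Hypothetical results for two variants with the same spend.
flashy = AdStats(impressions=10_000, clicks=400, conversions=8, spend=200.0, revenue=400.0)
plain = AdStats(impressions=10_000, clicks=250, conversions=10, spend=200.0, revenue=500.0)

for name, ad in [("flashy", flashy), ("plain", plain)]:
    print(name, f"CTR={ad.ctr:.2%} CVR={ad.conversion_rate:.2%} CPA={ad.cpa:.2f} ROAS={ad.roas:.2f}")
```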

11. Keep a running backlog of test ideas

Treat testing like product development. Maintain a list of hypotheses, prioritize by potential impact, and move through them systematically instead of ad hoc.

12. Document results in detail

Record what you tested, the metrics, and the context (audience, platform, season). Over time, this log becomes a creative playbook you can lean on instead of reinventing tests from scratch.

13. Rotate creatives before fatigue sets in

Most ads fatigue in 2–4 weeks. Use testing to spot winners early, then rotate new variants before performance collapses. This keeps campaigns efficient without burning audiences.

14. Scale winning ads gradually

When you find a strong performer, raise spend in controlled steps — 20–30% at a time. Sudden jumps can reset learning and erase gains. Scaling smoothly protects efficiency.
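A stepped ramp is easy to plan ahead of time. The sketch below lists the budget levels between a starting budget and a target, raising spend by a fixed percentage at each step. The `budget_ramp` name and the example figures are illustrative, and how long to hold each level before the next increase is left to your judgment.

```python
def budget_ramp(start, target, step=0.20):
    """List budget levels from start to target, raising by at most `step` each time."""
    if start <= 0 or target < start or step <= 0:
        raise ValueError("need start > 0, target >= start, and step > 0")
    schedule = [round(start, 2)]
    current = start
    while current < target:
        # Raise by the step percentage, but never overshoot the target.
        current = min(current * (1 + step), target)
        schedule.append(round(current, 2))
    return schedule


# Hypothetical: scale a $100/day winner to 5x in 20% steps.
for level, budget in enumerate(budget_ramp(100, 500, step=0.20)):
    print(f"step {level}: ${budget:.2f}/day")
```

At 20% per step, going from $100 to $500 a day takes nine increases, so a 5x scale-up is a matter of weeks rather than a single change.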

15. FAQ: How long should an ad creative test run?

Most tests need at least 7–10 days or until statistical confidence is reached. Shorter than that, and you risk false winners; much longer, and you risk wasting spend. The balance is speed plus validity — enough data to trust the result, but not so much that opportunities pass you by.
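To estimate duration before launch, you can work backward from the lift you hope to detect. The sketch below uses the standard sample-size approximation for comparing two proportions. The 80% power default, the function names, and the example traffic figures are assumptions, not platform guidance.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, confidence=0.95, power=0.80):
    """Approximate impressions needed per variant to detect a relative lift.

    baseline_rate: current rate per impression (e.g. 0.02 for a 2% CTR).
    relative_lift: smallest lift worth detecting (e.g. 0.20 for +20%).
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(sample_size, daily_impressions_per_variant):
    """Whole days required to collect the sample at a steady delivery rate."""
    return ceil(sample_size / daily_impressions_per_variant)


# Hypothetical: 2% baseline CTR, looking for a 20% relative lift,
# with 3,000 impressions per variant per day.
n = sample_size_per_variant(0.02, 0.20)
print(n, "impressions per variant,", days_needed(n, 3_000), "days")
```

With these inputs the estimate comes out to roughly 21,000 impressions per variant. Smaller lifts or lower baseline rates push the requirement up quickly, which is a useful check on whether a test can finish inside the 7–10 day window.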

How Zeely AI can help you test your ads

Manual testing takes time — creating dozens of variations, uploading assets, and tracking results across platforms. Zeely AI compresses that workflow into minutes. According to McKinsey’s 2025 Technology Trends Outlook, 65% of senior leaders cite AI adoption as a primary driver of scale and ROI. Zeely translates that trend into a practical toolkit for ad creative testing.

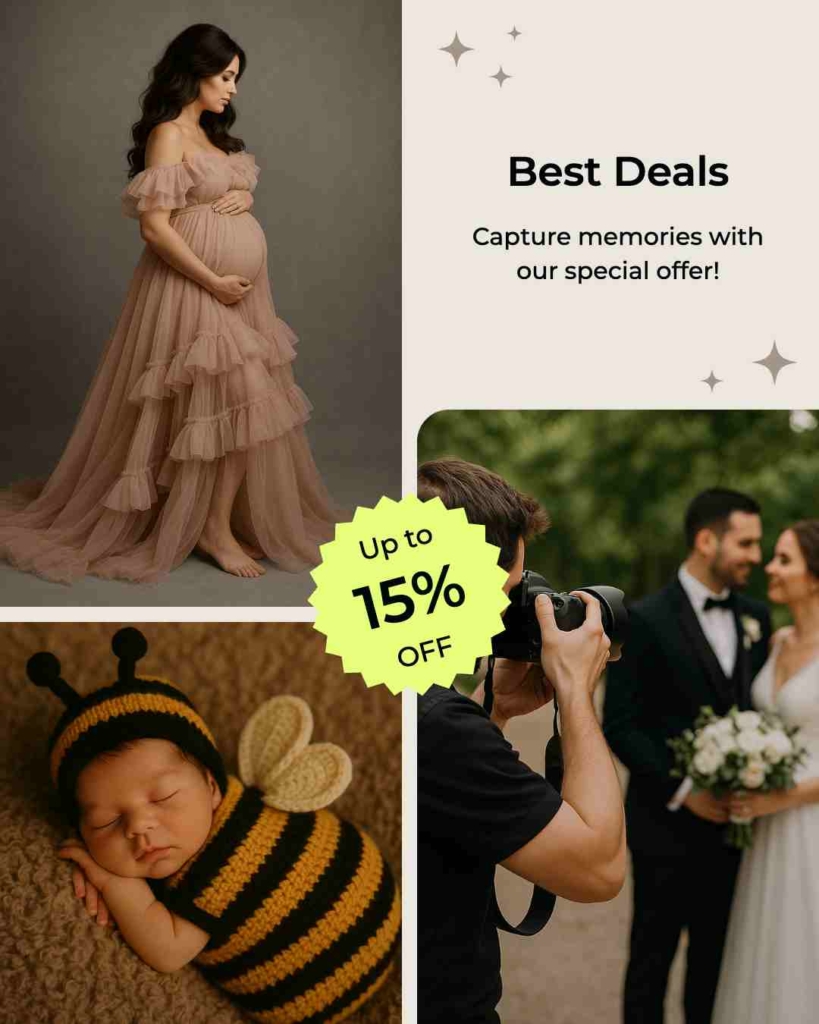

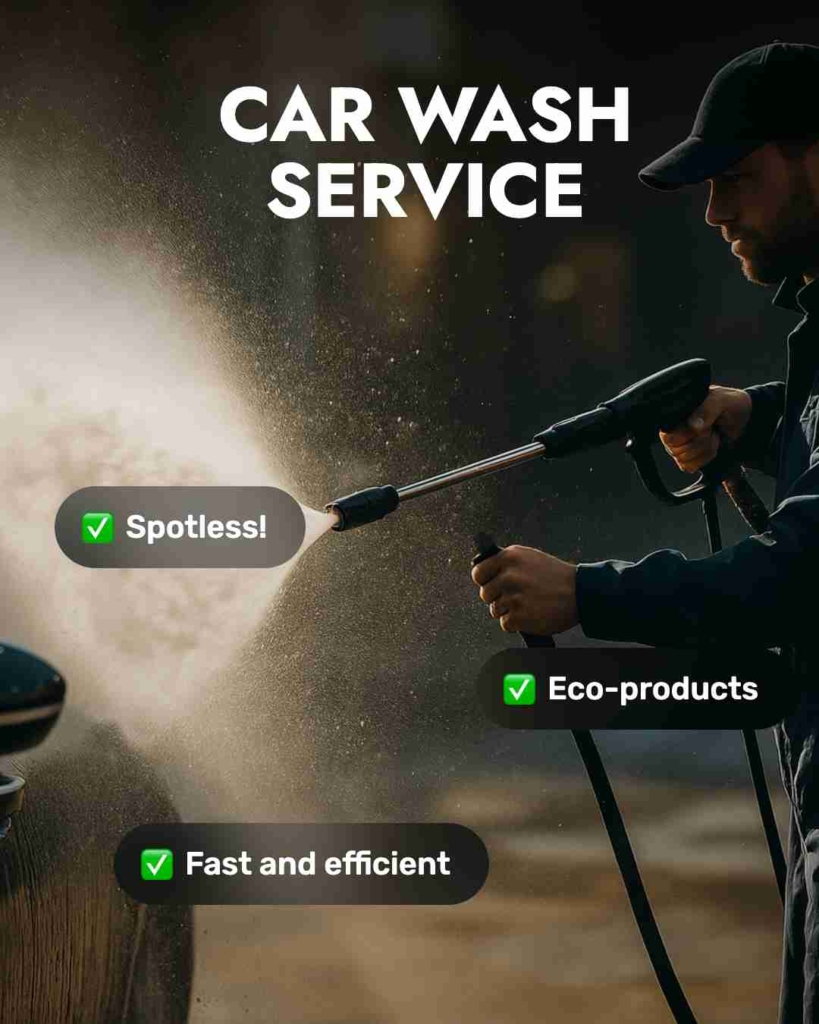

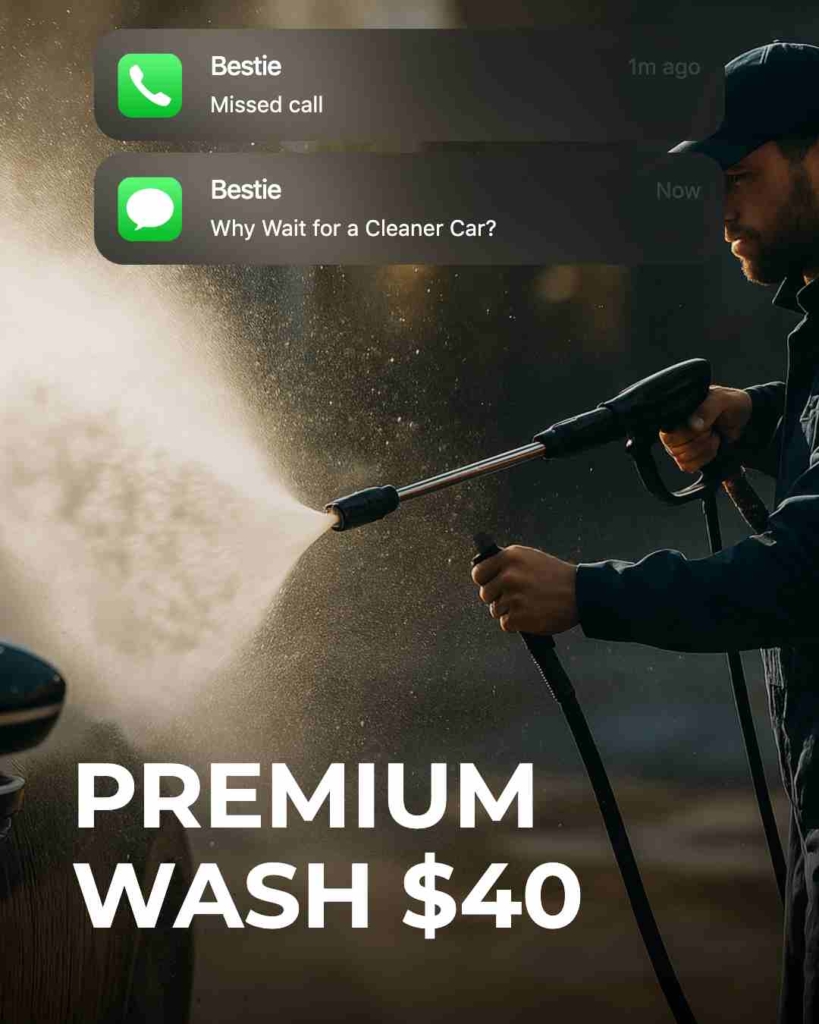

Static & video creative generation

With Zeely you can generate both static ads and video ads by simply pasting a product link. The platform builds headlines, hooks, images, and CTAs automatically.

- Static ads: Create dozens of creative variants in one click. Features include quick generation, batch creation, and platform-ready export

- Video ads: AI Video Maker delivers vertical, short-form videos in minutes. Users can add AI-generated voiceovers, captions, music, and hooks. These can then be tested across Meta Ads, TikTok, and Instagram.

Campaign-level testing features

Zeely doesn’t just create ads; it helps you test them:

- Bulk variants: Batch Mode generates multiple ad versions at once so you can test hooks, visuals, and CTAs in parallel

- Experiment templates: Structured creative templates make A/B testing smoother. You can swap variables systematically without starting from scratch

- Cross-platform readiness: Creative outputs are formatted for Meta Ads, TikTok, and YouTube Shorts, making it simple to run comparative tests

Workflow in practice

- Add product link: Zeely scrapes your page to auto-generate creative drafts

- Generate variants: Static, video, or both — with different hooks, visuals, or CTAs

- Run tests: Push variants to Meta Ads or TikTok and run A/B or multivariate tests

- Track & scale: Identify top performers, rotate creatives before fatigue, and scale budget into winners

The bigger advantage

The more variations you can produce and validate, the faster you find winners. McKinsey notes that companies investing in AI-assisted creative operations scale campaigns 2–3x faster while maintaining ROI discipline. Zeely brings that enterprise-level efficiency to everyday advertisers, giving small shops and growth brands the ability to test like pros.

Zeely AI was built to make creative testing faster, easier, and more accessible. By generating bulk creative variants, auto-tagging tests, and formatting ads for every platform, it turns testing from a time sink into a growth driver.

If you’re ready to run smarter experiments and find your next winning creative, try Zeely AI and see how quickly testing becomes second nature.
