Stop guessing, start winning: 7 tips for ad creative testing

What if you could know exactly which ad will convert before spending a dollar? Here are seven proven ways to master ad creative testing.

If you ever wonder why your advertising is not working, it is worth testing your creatives. Perhaps they do not interest your audience, or even irritate it. Ad creative testing is how you find out.

Advertising is a line item in every company’s budget, and it stings when the money is spent with nothing to show for it. According to Statista, worldwide advertising spend will reach $870.85 billion by 2027. Your company’s figures will be lower, but a wasted budget hurts just the same.

If your ads aren’t generating revenue, it doesn’t mean your target audience is ignoring you. Your creative may simply miss the mark. By testing variations, you learn which elements spark curiosity and lead to actual conversions. Ad creative testing is a data-driven process that shows how design and messaging affect user engagement.

Channel or audience testing focuses on who sees your ads. Creative testing, by contrast, examines the look and feel of those ads. It helps pinpoint the headlines, images, or formats that drive the best ROI. Let’s break down what ad creative testing is, how it works, and which best practices to follow.

Overview of ad creative testing

If you have launched an ad but the number of clicks on it is low, we recommend trying ad creative testing. It’s a data-backed way of comparing elements, like headlines, visuals, or calls to action, to see which version captivates your audience.

Unlike channel or audience tests, which focus on where and to whom your ads appear, creative testing zeroes in on what they see. This process doesn’t just validate ideas. It also helps you choose the best medium for your campaign and scale your business. Skip it, and you risk wasted ad spend, stale messaging, and overlooked growth opportunities.

Want a real example? An ecommerce store selling fitness gear tested two different ad visuals on Instagram. One ad showed just the product clearly, while the other featured an athlete using it. After 10 days and a budget of just $150, the athlete-focused visual drove 35% more sales, proving small, data-driven tweaks can make a big impact.

| Ad variation | Impressions | Clicks | Click-through rate | Conversions | Conversion rate | Cost per conversion | Lift vs. baseline |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Product-only image | 11,500 | 805 | 7.00% | 40 | 4.97% | $1.88 | – |
| Athlete using product | 12,200 | 1,038 | 8.51% | 54 | 5.20% | $1.39 | +35% |
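If you want to check numbers like these yourself, here is a minimal Python sketch. It assumes the $150 budget was split evenly ($75 per variant) and that conversion rate means conversions divided by clicks; the `Variant` class and `lift` helper are illustrative names, not part of any ad platform.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    name: str
    impressions: int
    clicks: int
    conversions: int
    spend: float  # assumption: the $150 budget split evenly, $75 per variant

    @property
    def ctr(self) -> float:
        # Click-through rate: clicks per impression
        return self.clicks / self.impressions

    @property
    def conversion_rate(self) -> float:
        # Assumption: conversions are measured against clicks
        return self.conversions / self.clicks

    @property
    def cost_per_conversion(self) -> float:
        return self.spend / self.conversions


def lift(variant: Variant, baseline: Variant) -> float:
    """Relative change in conversions versus the baseline."""
    return variant.conversions / baseline.conversions - 1


product = Variant("Product-only image", 11_500, 805, 40, 75.0)
athlete = Variant("Athlete using product", 12_200, 1_038, 54, 75.0)

for v in (product, athlete):
    print(f"{v.name}: CTR {v.ctr:.2%}, conversion rate {v.conversion_rate:.2%}, "
          f"cost per conversion ${v.cost_per_conversion:.2f}")
print(f"Lift vs. baseline: {lift(athlete, product):+.0%}")
```

Keeping the formulas in one place makes it easier to compare every future test on the same terms.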

Even a small budget can fuel meaningful tests if you focus on one variable at a time. Ready to see how it all works? Let’s break down the steps.

How it works

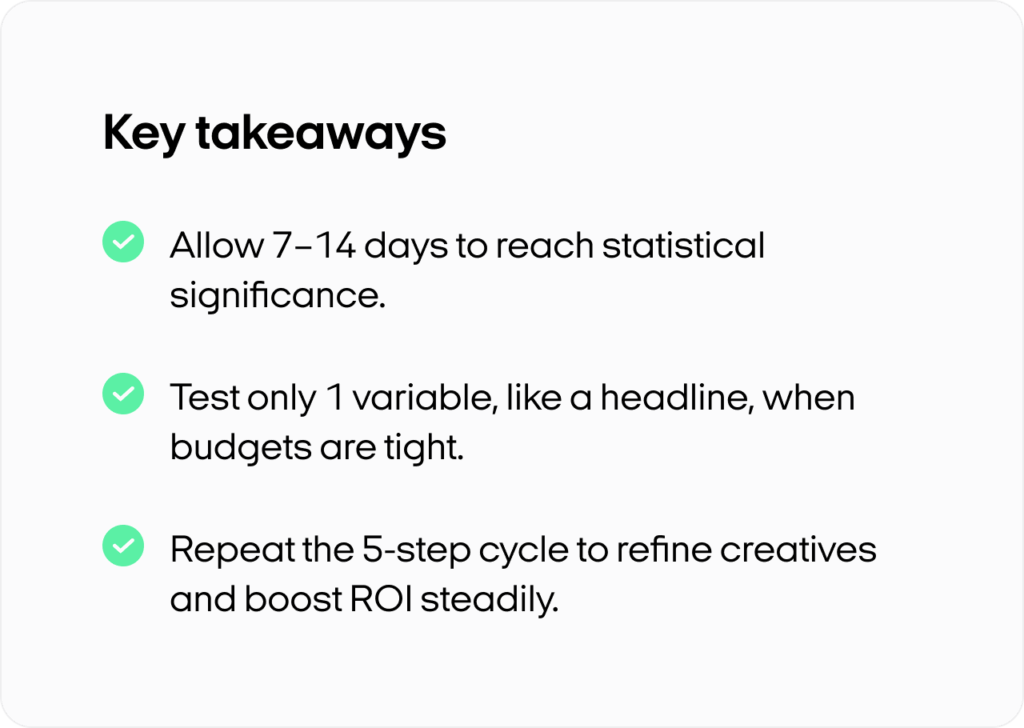

Ad creative testing usually follows five straightforward steps:

- Determine your primary metrics — click-through rate, cost per acquisition. If your budget is tight, test only one variable to keep results clear

- Decide which parts to swap — headlines, visuals, or calls to action — while maintaining a control version for accurate comparisons

- Upload each creative to Google Ads or Facebook Ads Manager and allow at least 7–14 days, or enough impressions, to reach statistical significance. Note that multivariate testing requires more traffic

- Monitor indicators like CTR, conversion rate, or bounce rate. Avoid ending the test prematurely; allow enough time to confirm real trends over short-lived spikes

- Measure each variant against the control. Keep what works, drop what doesn’t, and document each proven success so you can build on it in the next round

By following this cycle, you swap guesses for evidence-based insights. Each test refines your ad creative a little more, saving you budget and boosting results.

Whether you’re running a small local campaign or a global digital advertising strategy, these steps let you adapt quickly and stay ahead of ad fatigue — all while steadily increasing your ROI.

Ad creative testing: importance for business

If your ad has ever failed even though you expected it to succeed, you should learn why ad creative testing is important. Instead of guessing which headline or image might work, you run structured checks to see what actually resonates. By focusing on hard data, you avoid wasted spend and refine each campaign’s performance.

Budget optimization

Compare headlines, visuals, or calls to action, and you’ll spot the version that converts. That insight protects your budget. Widespread use of customer analytics can increase sales by almost three times, according to McKinsey. It might be worth using this data when testing ads.

Audience insights

Your audience isn’t one-size-fits-all. Some respond to playful copy, while others prefer direct messaging. Testing reveals these nuances so you can match each ad to its right segment.

In eCommerce, bold visuals might spark a surge in clicks. In B2B, a clear, benefits-focused headline could seal the deal. That’s how you turn broad targeting into genuine engagement.

Preventing ad fatigue

A powerful ad eventually grows stale if your audience sees it too often. Keep an eye on CTR or frequency to spot sliding engagement — signs of ad fatigue. Refresh your headline or swap an image before your results dip. Simple tweaks can re-energize your campaign and protect ROI.
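One rough way to spot sliding engagement is to compare recent CTR with the CTR a creative earned when it launched. The sketch below is an illustration only: the 3-day window, the 20% drop threshold, and the daily CTR values are all assumptions you would tune to your own campaigns.

```python
from statistics import mean


def shows_fatigue(daily_ctr: list[float], window: int = 3, max_drop: float = 0.20) -> bool:
    """Flag fatigue when recent average CTR falls more than max_drop below the early average."""
    if len(daily_ctr) < 2 * window:
        return False  # not enough history to compare two separate windows
    early = mean(daily_ctr[:window])
    recent = mean(daily_ctr[-window:])
    return recent < early * (1 - max_drop)


# Hypothetical daily CTRs for one creative, oldest first
ctr_history = [0.081, 0.079, 0.080, 0.074, 0.069, 0.063, 0.058, 0.055]

if shows_fatigue(ctr_history):
    print("CTR is sliding: refresh the headline or swap the image")
else:
    print("No clear sign of fatigue yet")
```

A check like this only looks at CTR, so pair it with frequency data from your ad platform before deciding to rotate a creative.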

Data-driven gains

Every test teaches you something new. Maybe a “Shop Now” button drives 15% more clicks than “Buy Now.” Log that success, refine your next ad, and watch each small win stack up.

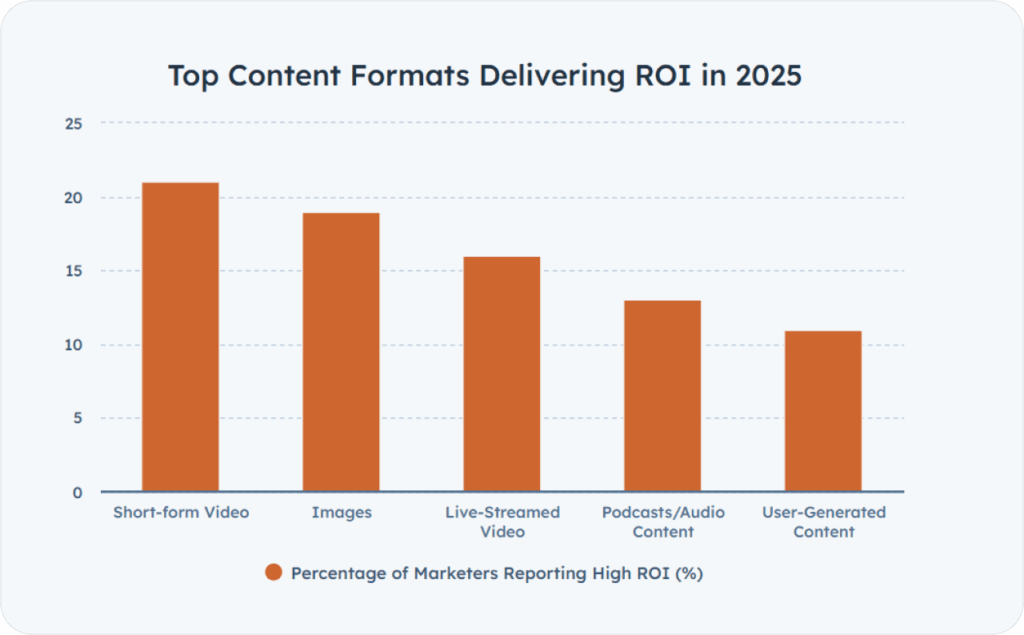

In the end, creative testing spares you from costly guesses and keeps your campaigns evolving over time. As for creative formats, 21% of marketers say short videos have a high ROI, according to HubSpot.

Methods for testing ad creatives

Not sure which testing method suits your goals or budget? Each approach offers different benefits, whether you’re fine-tuning a single headline or validating a bold new concept.

By choosing the right technique for each funnel stage and audience size, you’ll make data-driven decisions and boost ROI across your digital ads.

A/B testing

A/B testing compares two versions of an ad to see which one performs better. You might change only the headline, CTA text, or color scheme. Both ads run at the same time, so you can track click-through rates and conversions to find the winning element.

It’s ideal for campaigns focused on conversion where small changes can have a big impact. If you can reach about 5,000–10,000 impressions per variant, you’ll likely gather enough data to confirm a statistically significant winner.
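To judge whether a difference between two variants is more than noise, one common option is a two-proportion z-test. The sketch below uses only the Python standard library; the impression and click counts are hypothetical, and the 5% significance threshold is a convention rather than a rule.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for the difference between two rates."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical test: 6,000 impressions per variant, 120 vs. 156 clicks
z, p = two_proportion_z_test(success_a=120, n_a=6_000, success_b=156, n_b=6_000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("Significant at the 5% level" if p < 0.05 else "Not conclusive yet, keep the test running")
```

The same function works for conversions per click; just pass clicks as the sample size and conversions as the successes.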

Want a real example? At Vestaire, the influencer marketing agency invited eight influencers to craft social media content using specific calls to action that matched the brand’s goals. Each influencer had plenty of creative freedom, leading to a wide range of posts — photos, short videos, and personal stories.

After collecting performance metrics on everything they produced, the agency relied on A/B testing to identify the most effective content. Finally, they promoted these top-performing pieces with paid ads, ensuring the brand’s message reached its target audience with maximum impact.

Multivariate testing

Multivariate testing allows you to optimize multiple elements together. Unlike A/B testing, which isolates one variable, multivariate testing looks at several factors. This approach reveals how these pieces interact, making it valuable for more advanced testing scenarios.

You’ll need enough traffic — often 20,000+ impressions across various combinations — to reach reliable conclusions, but the payoff can be significant. When you’re juggling multiple potential tweaks, a single campaign can uncover which set of variables drives the most conversions.
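The traffic requirement grows quickly because every extra element multiplies the number of combinations. Here is a small sketch of that arithmetic; the headlines, image names, and the 5,000-impression floor per combination are assumptions for illustration.

```python
from itertools import product


def build_combinations(*element_options: list[str]) -> list[tuple[str, ...]]:
    """Every possible pairing of the supplied creative elements."""
    return list(product(*element_options))


# Hypothetical creative elements; swap in your own
headlines = ["Free shipping today", "Built for athletes"]
images = ["product_only", "athlete_in_action"]
ctas = ["Shop Now", "Buy Now"]

combinations = build_combinations(headlines, images, ctas)
impressions_per_combo = 5_000  # assumed floor per combination
total_impressions = len(combinations) * impressions_per_combo

print(f"{len(combinations)} combinations to test")
print(f"About {total_impressions:,} impressions needed in total")
```

Two options for each of three elements already means eight ads, which is why trimming the option list is often the cheapest way to make a multivariate test feasible.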

Usability testing

Usability testing digs into how people actually engage with your ads. Instead of relying solely on clicks, you watch or interview real users as they browse your creative or landing page.

This hands-on feedback can highlight confusing copy, cluttered layouts, or hidden calls to action. Even five user sessions can reveal consistent issues you can fix before rolling out a campaign at scale.

Concept testing

Concept testing evaluates early-stage ideas — such as a new brand theme, bold visuals, or experimental ad format — without committing a major budget. You might run limited, low-cost ads or show mock-ups to a focus group.

This approach helps you decide if the concept resonates or needs a pivot before a full launch. It’s especially useful at the top of the funnel, or whenever you’re introducing a fresh look and feel.

Choose the right method

A quick decision matrix

| | A/B testing | Multivariate testing | Usability testing | Concept testing |
| --- | --- | --- | --- | --- |
| Best for | Single-element tweaks | Multiple changes at once | Interactive or UX-heavy ads | Validating bold or unproven ideas early |
| Funnel stage | MOFU/BOFU | MOFU/BOFU with high traffic | Awareness/consideration | Any stage |
| Traffic needed | 5,000–10,000 impressions | 20,000+ impressions | 5–7 sessions | Low to moderate |
| Complexity | Low | Medium-high | Moderate, requires user feedback tools | Low-medium |

If you’re only changing one element — like a headline — A/B testing gets results fast. When multiple elements might affect performance, multivariate testing uncovers the optimal combo.

Usability testing adds qualitative insight by showing why an ad engages people. Finally, concept testing lets you validate a whole creative vision before you commit resources.

Set up your ad creative test

Ever wonder why some ads perform better at the top of your funnel than at the bottom? Many marketers still gamble on untested ads, missing out on proven ways to boost conversion rates. Below is a step-by-step guide to help you launch tests that uncover what truly drives performance.

Funnel-stage matrix for KPIs

- TOFU: Impressions, brand-lift surveys, click-through rate

- MOFU: Cost per lead, sign-up rate, remarketing CTR

- BOFU: Cost per acquisition, purchase rate, average order value

Quick tip: As of April 2024, Instagram had 2 billion monthly active users, and 32% of them were aged 18 to 24. This is worth considering when testing ads on this platform.

Define objectives and KPIs

Determine what you’re trying to achieve — whether it’s building brand awareness, generating leads, or closing sales. This focus ensures that your creative testing aligns with your business’s real-world needs. For top-of-funnel campaigns, you might measure impressions or brand sentiment. At the bottom, cost per acquisition or revenue-based KPIs matter more.

Choose the testing method

Next, pick the right approach. A/B testing is simpler: you change one element while everything else stays the same. It’s ideal for smaller campaigns or when you need quick, clear data.

Multivariate testing alters multiple components in different combos to see which set drives the best performance metrics. You’ll need enough traffic, often tens of thousands of impressions, to ensure statistical significance for multivariate, but it can uncover synergy effects you’d miss otherwise.

Useful tip: For TOFU brand-lift goals, pair A/B or multivariate with short user polls. This checks if your audience actually perceives the message you’re testing.

Establish control creatives and variations

Start with a control creative — your original ad. Then create clearly labeled variations that isolate the elements you want to test. In an A/B scenario, maybe you only swap the CTA text.

If you’re testing multiple variables, build distinct sets that cycle through each possibility. Stay consistent with brand guidelines so you can trace changes back to a specific tweak, not a random design shift.

Determine sample size and timeline

Use a sample size calculator to figure out your impression thresholds. If you only get 1,000 daily impressions, budget enough time to let each variant reach a conclusive result.

If you’re testing brand recall, consider how many responses or poll completions you need before deciding. Ending a test too soon often yields false positives or incomplete insights.

Tip: If you see early spikes, resist the urge to call a winner at day two. Let your test run until you meet or exceed your planned thresholds.
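If you are curious what a sample size calculator does under the hood, the sketch below applies the standard formula for comparing two proportions. The baseline and expected rates, the 5% significance level, and the 80% power are assumptions; the 1,000 daily impressions come from the example above.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Impressions needed per variant to detect a change from baseline_rate to expected_rate."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(baseline_rate * (1 - baseline_rate)
                                 + expected_rate * (1 - expected_rate))) ** 2
    return ceil(numerator / (expected_rate - baseline_rate) ** 2)


def days_needed(n_per_variant: int, variants: int, daily_impressions: int) -> int:
    """Days required when daily impressions are shared across all variants."""
    return ceil(n_per_variant * variants / daily_impressions)


# Hypothetical goal: detect a CTR improvement from 7% to 8.5%
n = sample_size_per_variant(baseline_rate=0.07, expected_rate=0.085)
days = days_needed(n, variants=2, daily_impressions=1_000)
print(f"{n:,} impressions per variant, roughly {days} days at 1,000 impressions a day")
```

Smaller expected differences push the required sample up sharply, so decide in advance what size of improvement is worth detecting.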

Launch, monitor, and analyze

Once live, track performance metrics daily via Google Analytics, Facebook Ads Manager, or a tool like Zeely AI. Keep an eye on CTR, cost per lead, or conversions — whatever you chose initially. If the test is for brand awareness, watch ad recall lifts or brand sentiment surveys.

Don’t stop mid-test unless there’s a major budget or brand risk. When you reach your sample size or timeline, review which variant outperformed the rest and note any surprising data.

Mini-pitfall: Watch out for conflicting KPIs. High CTR but low conversions might mean your ad is catchy but not persuasive. Decide which metric to prioritize based on your funnel stage.
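One way to settle conflicting KPIs is to fix the deciding metric per funnel stage before the test starts. The sketch below is a simplified illustration: the stage-to-metric mapping follows the funnel-stage matrix earlier in this section, while the metric keys and result values are hypothetical.

```python
# Primary KPI per funnel stage; True means higher is better, False means lower is better
PRIMARY_KPI = {
    "TOFU": ("ctr", True),
    "MOFU": ("cost_per_lead", False),
    "BOFU": ("cost_per_acquisition", False),
}


def pick_winner(variants: dict[str, dict[str, float]], stage: str) -> str:
    """Return the variant name that wins on the stage's primary KPI."""
    metric, higher_is_better = PRIMARY_KPI[stage]
    chooser = max if higher_is_better else min
    return chooser(variants, key=lambda name: variants[name][metric])


# Hypothetical results: variant A is catchy, variant B is persuasive
results = {
    "A": {"ctr": 0.085, "cost_per_lead": 4.10, "cost_per_acquisition": 21.50},
    "B": {"ctr": 0.070, "cost_per_lead": 3.60, "cost_per_acquisition": 17.80},
}

for stage in PRIMARY_KPI:
    print(f"{stage}: variant {pick_winner(results, stage)} wins")
```

Writing the rule down up front keeps you from choosing the metric after the fact to justify a favorite creative.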

Common pitfalls & troubleshooting

Even well-planned ad creative tests can stumble on a few predictable hurdles. Here are some common pitfalls to watch out for and how to address them:

Inconclusive results

You may not have run the test long enough or used enough traffic. Extend the timeframe or combine variants for more data

Conflicting KPIs

If one version wins on CTR but loses on conversions, decide which metric aligns best with your funnel goals

Too many changes at once

In A/B tests, stick to one main variable per variant. For deeper multi-element tests, switch to multivariate with enough impressions

Low traffic

You might need to pool audiences or run the test longer, ensuring each ad gets enough exposure

Best practices & tips for creative testing

Some experts say that a structured creative testing framework can lift conversions by 30%. By fine-tuning a few core practices, you’ll see stronger returns on ad spend at every funnel stage, from top-of-funnel awareness to bottom-of-funnel direct conversions.

Leverage historical data

Your past campaign data is a goldmine of insights and performance patterns. If a short CTA boosted clicks last season or a bright color scheme worked in a summer sale, revive those tactics in new tests. By mapping your historical data, you avoid repeating old mistakes and build on proven wins.

Test one variable at a time

Changing several elements in one go muddles results. Embrace isolation of variables — focus on a single tweak at a time. This clarity yields actionable insights, so if conversions jump 10%, you’ll know it’s thanks to the new headline, not something else.

Only then can you move on to developing multiple concepts. According to Shopify:

“Creating multiple creative concepts allows you to maximize your experimentation and get the most out of your testing to optimize your marketing performance.”

Allow time for statistical significance

Don’t call a winner after just one day. A truly data-driven test needs to run long enough, often a week or two, to gather reliable insights. If you bail early, you could crown a “winner” that’s just riding a fluke surge. Use a sample size calculator to gauge the impressions or conversions needed before stopping.

Document results and learnings

Recording each variant and outcome in a testing log is crucial. Include details like the test setup, audience segment, and creative changes. Over time, you’ll see patterns that drive continuous improvement. That’s how you skip re-testing failed ideas and replicate your best hits.
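A testing log does not need special software; a CSV file with consistent columns is enough to start. The sketch below shows one possible layout. The `CreativeTestRecord` fields and the sample entry are assumptions, so adapt the columns to what your team actually tracks.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class CreativeTestRecord:
    test_name: str
    audience_segment: str
    variable_changed: str
    control: str
    variant: str
    primary_metric: str
    control_value: float
    variant_value: float
    winner: str
    notes: str = ""


def write_log(records: list[CreativeTestRecord], handle) -> None:
    """Write records as CSV rows to any file-like object."""
    writer = csv.DictWriter(handle, fieldnames=[f.name for f in fields(CreativeTestRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


# Hypothetical entry; in practice you would pass an open file instead of StringIO
buffer = io.StringIO()
write_log([
    CreativeTestRecord("cta-test-01", "18-24, US", "CTA text", "Buy Now", "Shop Now",
                       "ctr", 0.070, 0.081, "variant", "Re-test on video format"),
], buffer)
print(buffer.getvalue())
```

Because every row has the same columns, you can later filter the log by audience segment or variable to see which kinds of changes tend to win.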

Use automation tools

Manually juggling 20 or 30 ads is tough. An AI ad generator, like Zeely AI, or other automated solutions can rotate variations, track performance, and identify early champions. This scalable testing saves time and helps catch subtle synergies, like a specific CTA paired with a particular image, faster than manual reviews.

Test across audience segments

Not all ads resonate with every group. Lean into demographic testing, targeting, or geographic segmentation to see how each audience reacts to your creative. A cheeky tagline might thrill younger shoppers but alienate older ones. Tailor your ads once you see who likes short copy vs. who needs deep details.

Monitor competitors & adjust

Keep an eye on competitors and emerging market trends. If rivals shift to user-generated content or short videos, see if those angles fit your brand. Also be ready to adjust when signs of ad fatigue appear, like a dipping CTR at a stable frequency. Refresh headlines or visuals before viewers tune out completely.

Common pitfalls

Inconclusive results can occur if you haven’t run the test long enough or if no variant stands out; consider extending the timeframe or combining smaller segments for more data. Conflicting KPIs, such as a high CTR but low conversions, require deciding which metric is most crucial for your funnel stage. Overreliance on automation may lead AI to select a “winning” ad that lowers lead quality, so always cross-check results manually. Finally, monitor for ad fatigue; if CTR steadily drops, it could mean viewers have seen the creative too often, so rotate your ads to keep them fresh.

Zeely AI: Automate ad creative testing

Tired of spending hours building ad creatives from scratch? Zeely AI streamlines automated ad creative testing by drastically cutting production time. Need a quick video ad? You’ll set it up in just 3 minutes, then let Zeely AI handle 5–6 minutes of rendering. Prefer a static ad? Create one in 1 minute and spend 1–5 minutes refining it. In total, you can launch an entire ad campaign in 30 minutes or less, including setup, rendering, and final tweaks.

This speed means you’ll test more variants, catch winning combos faster, and avoid the guesswork of manual ad creation. Whether you’re optimizing top-of-funnel awareness or fine-tuning bottom-of-funnel conversions, Zeely AI offers the automation you need for hassle-free creative testing that delivers real returns.

Conclusion & next steps

Still unsure how to wrap up your ad creative testing roadmap? Structured tests can lift conversions, but success relies on consistent improvements. Below is a final recap with added depth, real-world context, and a glance at potential pitfalls — so you’ll stay data-driven and avoid common stumbling blocks.

Key takeaways

Ad creative testing isn’t a one-off task. It’s an ongoing cycle that spotlights your best copy, design, and audience fit. When you isolate variables, track results, and refresh creatives regularly, you protect yourself from ad fatigue and maintain strong returns on your ad spend.

Action steps in a nutshell

- Pinpoint old campaign wins for quick gains

- If you’re swamped with manual tasks, an automated platform can free you to dig into data-driven insights rather than toggling settings

- Tailor messages to each group. This approach avoids wasted impressions and zeroes in on who’s most likely to convert

- Keep a log of each test setup, metrics, and key findings. Next time, you’ll skip repeated errors and expand on proven tactics

- A competitor that pivots to short-form video or user-generated content might signal a market shift. Adjust your creative if you see user interest shifting

Ready to implement?

Gather your historical data, execute a clear testing framework, and stay flexible as market trends evolve. Each new discovery becomes part of your creative testing roadmap. By testing strategically and reacting to fresh insights, you’ll keep your ROI moving upward in a fast-changing digital advertising world. Try Zeely AI to test your creatives and boost your campaign!
